5 Ways Companies Can Protect Themselves When AWS Goes Down

A recent wave of unexpected outages at Amazon Web Services has caused thousands of cloud architects and IT ops teams to rethink their systems recovery strategies. Logz.io’s Dotan Horovits shares with IDN proven ways to protect apps, data and careers when AWS goes down.

by Dotan Horovits, Principal Developer Advocate at Logz.io

Tags: AWS, chaos engineering, cloud, disaster recovery, disruption, Logz.io, outages


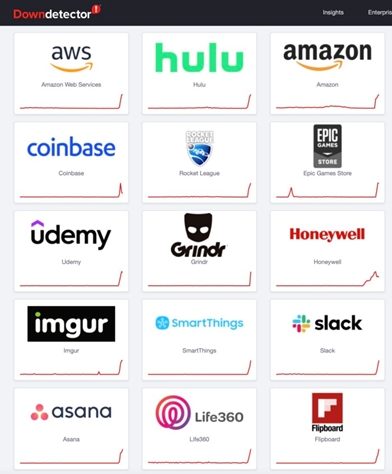

In the span of one month at the end of 2021, AWS reported three outages. These service disruptions affected big-name sites such as Slack, Hulu, and Asana, to name just a few.

You can imagine the impact these outages had on those who rely on such popular productivity tools and communications platforms, even more so these days when most people are working remotely.

Imagine the even larger impact outages can have on companies connecting larger groups and running their mission-critical workloads in the cloud.

These recent outages raise a question: how can companies protect themselves from such unexpected service disruptions? In this article I’d like to explore battle-tested best practices for business continuity and disaster recovery (BCDR).

Design for Failure and Chaos Engineering

The first and most fundamental principle in building a robust architecture is to design for failure. Designing for failure from day one allows any instance or group of instances to recover easily when something goes wrong.

Source: downdetector.com

This principle should apply throughout the design, development, deployment, and maintenance stages of the system. Some companies even embed Chaos Engineering practices in their work procedures, simulating random failures to better prepare for potential disaster events. Netflix pioneered the practice with its Chaos Monkey service, built to get its engineering teams used to a “constant level of failure in the cloud”.

Chaos Engineering essentially means running controlled experiments on how your system might break. As in a scientific experiment, you start by defining the “normal” system behavior (the steady state) and the KPIs that measure it, then form a hypothesis about how the system will react to a realistic malfunction such as network jitter, dying servers or non-responsive processes. The experiment is then run against a test group, typically in production, while a control group is kept for reference and the defined KPIs are monitored for both.

Since the experiment runs in a production environment and may affect real users, it’s important to contain its blast radius and to extend it gradually and in a controlled manner. DevOps teams often own Chaos Engineering in organizations, though many stakeholders are involved, such as system architects, SREs, infrastructure teams and application developers.
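The experiment loop described above can be sketched in a few lines of Python. This is a minimal simulation, not a real chaos tool: `call_service`, `run_experiment`, the 1% baseline failure rate and the "first instance is dead" fault are all hypothetical stand-ins chosen for illustration. It shows the steady-state KPI (error rate), a hypothesis expressed as a tolerance, a test group limited by a blast radius, and a control group for comparison.

```python
import random
from dataclasses import dataclass

BASELINE_FAILURE = 0.01  # assumed steady-state failure probability per attempt


@dataclass
class ExperimentResult:
    control_error_rate: float
    test_error_rate: float
    hypothesis_holds: bool


def call_service(rng: random.Random, fault_injected: bool, retries: int) -> bool:
    """Hypothetical request path; returns True when the request succeeds."""
    for attempt in range(retries + 1):
        if fault_injected and attempt == 0:
            continue  # injected fault: the first instance tried is dead
        if rng.random() >= BASELINE_FAILURE:
            return True
    return False


def run_experiment(requests: int, blast_radius: float, tolerance: float,
                   retries: int = 1, seed: int = 42) -> ExperimentResult:
    """Inject a fault for a small test group and compare it with a control group."""
    if not 0.0 < blast_radius < 1.0:
        raise ValueError("blast_radius must be strictly between 0 and 1")
    rng = random.Random(seed)
    # The blast radius caps how many requests are exposed to the fault.
    test_size = max(1, int(requests * blast_radius))
    control_size = requests - test_size
    if control_size < 1:
        raise ValueError("not enough requests left for a control group")

    test_failures = sum(
        not call_service(rng, True, retries) for _ in range(test_size))
    control_failures = sum(
        not call_service(rng, False, retries) for _ in range(control_size))

    test_rate = test_failures / test_size
    control_rate = control_failures / control_size
    # Hypothesis: the fault raises the error rate by no more than `tolerance`.
    holds = (test_rate - control_rate) <= tolerance
    return ExperimentResult(control_rate, test_rate, holds)


with_retry = run_experiment(requests=10_000, blast_radius=0.05, tolerance=0.05)
without_retry = run_experiment(requests=10_000, blast_radius=0.05,
                               tolerance=0.05, retries=0)
print(with_retry.hypothesis_holds, without_retry.hypothesis_holds)
```

In this toy model the hypothesis survives only when the request path retries against a healthy instance; with retries disabled, every request in the test group fails, which is exactly the kind of weakness such an experiment is meant to surface while the blast radius is still small.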

Stateless and Autonomous Services

If possible, divide your business logic into stateless services, to allow easy fail-over and scalability. When designed right, in case of failure your system should be able to route requests to another service instance and automatically spin up a new node to replace the failed one.

To ensure your services are autonomous, make sure they are well-encapsulated and interact with each other via well-defined APIs (no direct queries against a central database or other “optimized” hooks, please). These principles help contain the ripple effect of a failure, so that a failure of one service does not take down others. If one of your services doesn’t meet these criteria, consider breaking it down into smaller, well-encapsulated services.

It’s also advisable to group stateless services into homogeneous pools, which provide not only fail-over between the pool’s instances but also service elasticity, as the server pool can scale in real time based on load.
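As a rough illustration of routing around a failed instance and replacing it, here is a minimal in-process sketch. The `ServicePool` class, the `spawn` factory and the use of `ConnectionError` as the failure signal are all assumptions made for the example; a real deployment would rely on a load balancer and an orchestrator instead.

```python
from typing import Callable, List

Handler = Callable[[str], str]  # a stateless service instance: request -> response


class ServicePool:
    """Homogeneous pool of stateless instances with fail-over and replacement."""

    def __init__(self, size: int, spawn: Callable[[], Handler]) -> None:
        self._spawn = spawn
        self._instances: List[Handler] = [spawn() for _ in range(size)]
        self._cursor = 0

    def call(self, request: str) -> str:
        # Try each slot at most once per request, in round-robin order.
        for _ in range(len(self._instances)):
            index = self._cursor % len(self._instances)
            self._cursor += 1
            try:
                return self._instances[index](request)
            except ConnectionError:
                # Because instances hold no state, a failed one can simply
                # be replaced and the request routed to the next instance.
                self._instances[index] = self._spawn()
        raise RuntimeError("all instances in the pool failed")


def make_spawner(fail_first: int) -> Callable[[], Handler]:
    """Hypothetical factory: the first `fail_first` nodes it creates are broken."""
    created = {"count": 0}

    def spawn() -> Handler:
        created["count"] += 1
        node_id = created["count"]

        def handler(request: str) -> str:
            if node_id <= fail_first:
                raise ConnectionError(f"node-{node_id} is unreachable")
            return f"node-{node_id} handled {request}"

        return handler

    return spawn


pool = ServicePool(size=3, spawn=make_spawner(fail_first=1))
print(pool.call("a"))  # node-1 fails, so the request is served by node-2
```

The sketch works only because the handlers are stateless: nothing has to be migrated from the failed node before its replacement starts taking traffic.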

Redundant Hot Copies Spread Across Zones

By replicating your data to other zones, you insulate your service from zone-wide failure. By doing so, when a failure occurs the system can retry in another zone, or switch over to the hot standby.

It’s also important to configure timeout and retry policies to avoid delays in failing over to another copy. By running multiple redundant hot copies of each service, you can use quick timeouts and retries to route around failed or unreachable services and reduce downtime.
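To make the effect of timeout and retry settings concrete, the following sketch tries redundant copies zone by zone and also computes the worst-case delay before a request reaches a healthy copy. The names `call_with_failover`, `worst_case_failover_delay`, `unreachable` and `healthy` are hypothetical, and each replica is modeled as a plain function that raises `TimeoutError` rather than a real network client.

```python
from typing import Callable, List, Optional, Tuple

Replica = Callable[[str, float], str]  # (request, timeout_s) -> response


def call_with_failover(replicas: List[Tuple[str, Replica]], request: str,
                       timeout_s: float = 0.2, retries: int = 1) -> Tuple[str, str]:
    """Try each hot copy in order; return (zone, response) from the first success."""
    last_error: Optional[Exception] = None
    for zone, replica in replicas:
        for _ in range(retries + 1):
            try:
                return zone, replica(request, timeout_s)
            except (TimeoutError, ConnectionError) as exc:
                last_error = exc  # remember the failure and keep going
    raise RuntimeError("all replicas failed") from last_error


def worst_case_failover_delay(timeout_s: float, retries: int,
                              failed_replicas: int) -> float:
    """Upper bound on time spent waiting on dead copies before a healthy one is tried."""
    return timeout_s * (retries + 1) * failed_replicas


def unreachable(request: str, timeout_s: float) -> str:
    """Stand-in for a copy in a failed zone."""
    raise TimeoutError(f"no response within {timeout_s}s")


def healthy(request: str, timeout_s: float) -> str:
    """Stand-in for a copy in a healthy zone."""
    return f"ok:{request}"


zone, response = call_with_failover(
    [("zone-a", unreachable), ("zone-b", healthy)], "GET /orders")
print(zone, response)
print(worst_case_failover_delay(timeout_s=0.2, retries=1, failed_replicas=1))
print(worst_case_failover_delay(timeout_s=30.0, retries=1, failed_replicas=1))
```

With a 0.2-second timeout and one retry, a dead zone costs well under a second before the request moves on; with a 30-second timeout and the same retry count, the same failure holds the request for a full minute.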

Multi-Region, Multi-Cloud and Hybrid-Cloud Strategies

Most IT organizations avoid depending on a single ISP by keeping a second ISP as backup. Even Amazon uses this strategy internally, running a primary and a backup network to ensure high availability of its cloud.

Similarly, you may want to avoid dependency on a single cloud vendor by having another vendor as backup. This holds true even if the vendor provides a certain level of resilience, as we saw with Amazon’s multi-AZ (Availability Zone) failure during recent outages.

Many of the companies that survived AWS outages in the US-EAST-1 (N. Virginia) region owe it to one of these key factors:

- Using their own data centers (a hybrid cloud model);

- Using other vendors (a multi-cloud model); or

- Using the US West Region of AWS (a multi-region model).

Within the bounds of AWS, regions give users a powerful availability building block, but application builders must invest effort to take advantage of this isolation.

From the API side, you need to use a separate set of APIs to manage each region. From the data side, it’s important to note that it is largely up to you to address the cross-region replication of data.

Some AWS services span multiple regions, but many don’t, and you may need to handle cross-region concerns in your application, especially if you deploy your own database on top of Amazon EC2 instances. In that context, it would also be advisable to avoid ACID data services and leverage NoSQL solutions wherever possible, as these provide higher availability and durability in exchange for more relaxed data consistency.

Consider balancing load dynamically, regardless of zone. When traffic is split equally by zone, as Amazon’s Elastic Load Balancer (ELB) does, a zone that has lost most of its capacity still receives its full share of traffic, which can overload its remaining instances and bring down the system. Services that use middle-tier load balancing can handle uneven zone capacity with no intervention.
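The arithmetic behind this point is easy to check. The sketch below compares the per-instance load under an equal-by-zone split with the load under balancing across all instances regardless of zone. The function names, zone names and traffic figures are hypothetical, and the model is deliberately simplified; it is not a description of how any particular load balancer is implemented.

```python
from typing import Dict


def load_per_instance_zone_equal(total_rps: float,
                                 instances_per_zone: Dict[str, int]) -> Dict[str, float]:
    """Split traffic equally across zones, then across instances inside each zone."""
    live = {zone: n for zone, n in instances_per_zone.items() if n > 0}
    if not live:
        raise ValueError("no healthy instances in any zone")
    zone_share = total_rps / len(live)
    return {zone: zone_share / n for zone, n in live.items()}


def load_per_instance_zone_agnostic(total_rps: float,
                                    instances_per_zone: Dict[str, int]) -> float:
    """Spread traffic evenly over every healthy instance, ignoring zones."""
    total_instances = sum(instances_per_zone.values())
    if total_instances == 0:
        raise ValueError("no healthy instances in any zone")
    return total_rps / total_instances


# zone-a has lost most of its capacity; zone-b is intact.
capacity = {"zone-a": 1, "zone-b": 9}
print(load_per_instance_zone_equal(1000, capacity))
print(load_per_instance_zone_agnostic(1000, capacity))
```

Under the equal-by-zone split, the single surviving instance in `zone-a` receives 500 requests per second while each instance in `zone-b` sees about 56; balancing across all ten instances gives each one 100.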

Automation and Monitoring

Automation is the key. Your application needs to pick up alerts on system events automatically, react to them, and remediate the incident. Everything should be automated: spinning up new instances, expanding clusters, taking and restoring backups, collecting metrics, monitoring, configuration and deployment.
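One simple way to picture automated reaction to alerts is a registry that maps each alert type to a remediation action. The sketch below is illustrative only: the `Alert` and `Remediator` classes, the alert kinds and the actions are hypothetical, and the actions return strings where a real system would call its cloud provider or orchestrator.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class Alert:
    kind: str    # e.g. "instance_down" (hypothetical alert type)
    target: str  # the affected resource


@dataclass
class Remediator:
    """Maps alert kinds to automated remediation actions."""
    playbooks: Dict[str, Callable[[Alert], str]] = field(default_factory=dict)
    unhandled: List[Alert] = field(default_factory=list)

    def register(self, kind: str, action: Callable[[Alert], str]) -> None:
        self.playbooks[kind] = action

    def handle(self, alert: Alert) -> Optional[str]:
        action = self.playbooks.get(alert.kind)
        if action is None:
            # No playbook yet: keep the alert so a human can follow up.
            self.unhandled.append(alert)
            return None
        return action(alert)


remediator = Remediator()
remediator.register("instance_down", lambda a: f"replaced {a.target}")
remediator.register("high_load", lambda a: f"scaled out {a.target}")

print(remediator.handle(Alert("instance_down", "web-3")))
print(remediator.handle(Alert("disk_full", "db-1")))  # no playbook registered
```

Alerts without a registered playbook are collected rather than dropped, which gives the team a natural backlog of remediation steps that still need to be automated.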

Detailed alerting mechanisms are also essential to the manual control of the system. Define your own internal alarms and dashboards to provide you with up-to-the-minute metrics on the state of your system. These metrics should span from your top Service-Level Objectives (SLOs) down to fine-grained system metrics such as request rate, CPU, and memory utilization.
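As a small example of turning an SLO into an internal alarm, the sketch below computes availability from request counts and tracks how much of the allowed failure budget is left. The error-budget framing is a common SRE convention rather than something prescribed here, and the function names, the 99.9% target and the 25% alert threshold are assumptions picked for illustration.

```python
def availability(total_requests: int, failed_requests: int) -> float:
    """Fraction of requests that succeeded in the measurement window."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return 1.0 - failed_requests / total_requests


def error_budget_remaining(slo_target: float, total_requests: int,
                           failed_requests: int) -> float:
    """Share of the failures allowed by the SLO that has not been used yet.

    1.0 means no failures so far; 0.0 or below means the SLO is violated.
    """
    allowed_failures = (1.0 - slo_target) * total_requests
    if allowed_failures <= 0:
        raise ValueError("the SLO target must leave room for some failures")
    return 1.0 - failed_requests / allowed_failures


def should_alert(slo_target: float, total_requests: int, failed_requests: int,
                 min_budget: float = 0.25) -> bool:
    """Raise an alarm once less than `min_budget` of the error budget is left."""
    remaining = error_budget_remaining(slo_target, total_requests, failed_requests)
    return remaining < min_budget


# Assumed SLO: 99.9% of requests succeed, measured over 1,000,000 requests.
print(availability(1_000_000, 250))
print(should_alert(0.999, 1_000_000, 250))
print(should_alert(0.999, 1_000_000, 900))
```

With 250 failures out of a million requests, roughly three quarters of the budget is still available and no alarm fires; at 900 failures only about a tenth remains, so the alarm triggers before the SLO itself is breached.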

Conclusion

Recent AWS outages serve as an important lesson to the IT world, and an important milestone in our maturity in using the cloud. The most important thing to do now is to learn from the mistakes made by those who went down with AWS, as well as from the success of the ones who survived it, and come up with proper methodology, patterns, guidelines, and best practices on doing it right.

Dotan Horovits is Principal Developer Advocate at Logz.io, and evangelizes on Observability in IT systems using open source projects such as the ELK stack, Prometheus, Grafana, Jaeger and OpenTelemetry. He has more than 20 years’ experience in cloud, big data and DevOps solutions.


All rights reserved © 2025 Enterprise Integration News, Inc.