Denodo Updates its Data Virtualization Platform to Provide ‘Consolidated Business Views’

Denodo's latest upgrade to its data virtualization platform aims to let companies simply and quickly deliver unified views and consistent data across a wide variety of sources. The Denodo 7 release comes as mounting data volumes from diverse data sources make digital transformation projects more challenging. IDN talks with Denodo’s Lakshmi Randall.

by Vance McCarthy

Tags: APIs, data catalogue, data virtualization, Denodo, in-memory, metadata



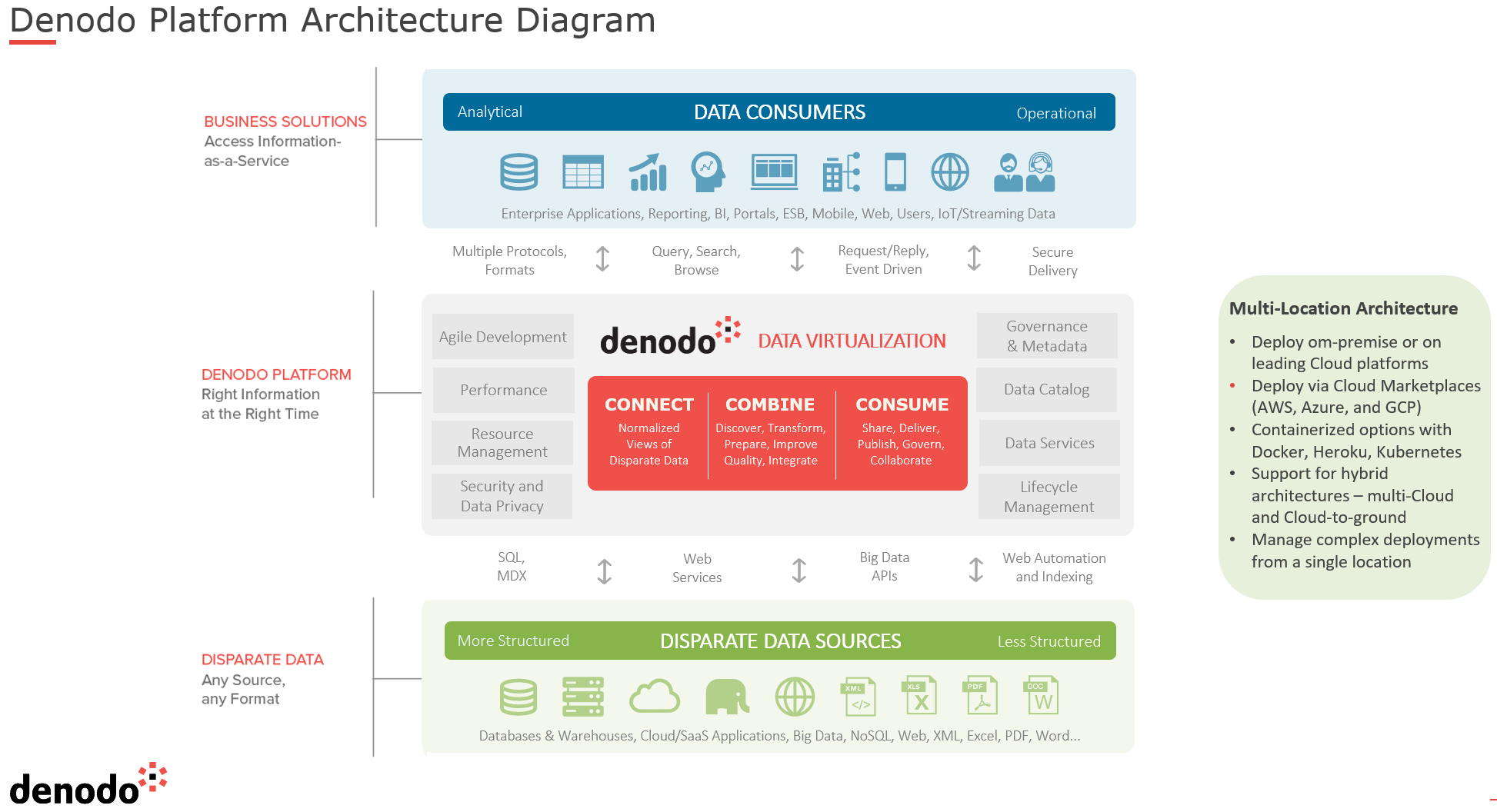


The latest upgrade to the Denodo data virtualization platform aims to let companies simply and quickly deliver unified views and consistent data across a wide variety of sources. Denodo 7 comes as mounting data volumes from diverse data sources make digital transformation projects more challenging.

“Many companies working on Big Data simply don’t have a consolidated, monolithic platform approach. But they still are looking to bring so many multiple pieces [of data] together -- without a lot of complexity,” Lakshmi Randall, Denodo’s director of product marketing, told IDN. The company’s Denodo 7 data virtualization platform aims to tackle this problem and deliver easy-to-reap benefits to both IT and business users, she added.

Specifically, Denodo 7.0 aims to provide faster, more reliable and simpler ways to access what Randall called “consolidated business views” of data. These views are unified, timely and relevant because they are assembled from multiple, dispersed data sources.

Once a user builds a consolidated view within the data virtualization layer, that user can work with it directly. The view can also be shared with others by publishing it as a data service, thanks to Denodo 7’s support for APIs. “This really offers flexibility in terms of how the data is provisioned to the business users,” Randall said.

Under the covers, Denodo 7.0 provides a data virtualization layer that combines a smart approach to metadata with an in-memory fabric, high-speed caching and a new dynamic data catalogue. Also on board is native support for Big Data’s most popular MPP (massively parallel processing) engines, including Spark, Hive, Impala and Presto, she added.

The result is a high-speed, high-quality layer that lets users work with diverse data in real-time and dynamic ways – all without requiring IT to move data around via complex hand-coded integrations or to build and populate physical data repositories.

“The way Denodo works, we don’t replicate, copy or move data. Our data virtualization approach leaves the data in place, while providing access to data from the multiple data platforms -- regardless of where the data reside,” said Randall, who is also a former Gartner analyst. The result is what could be considered a ‘virtual data repository,’ she added.
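To make the ‘virtual data repository’ idea concrete, here is a minimal Python sketch of a view that joins two sources at query time and stores no rows of its own. It is an illustration only, not Denodo’s implementation: the `VirtualView` class, the sample rows and the `customer_id` key are all hypothetical.

```python
from typing import Callable, Dict, Iterable, Iterator

Row = Dict[str, object]


class VirtualView:
    """Joins two live sources on a shared key at query time; holds no data itself."""

    def __init__(self, left: Callable[[], Iterable[Row]],
                 right: Callable[[], Iterable[Row]], key: str) -> None:
        self.left = left      # callable that reads the first source on demand
        self.right = right    # callable that reads the second source on demand
        self.key = key

    def query(self) -> Iterator[Row]:
        # Index the right-hand source for the duration of this query only.
        right_by_key = {row[self.key]: row for row in self.right()}
        for row in self.left():
            match = right_by_key.get(row[self.key])
            if match is not None:
                yield {**row, **match}


# Hypothetical stand-ins for a CRM system and a billing database.
crm_rows = [{"customer_id": 1, "name": "Acme"},
            {"customer_id": 2, "name": "Globex"}]
billing_rows = [{"customer_id": 1, "balance": 250.0}]

customer_view = VirtualView(lambda: crm_rows, lambda: billing_rows,
                            key="customer_id")
result = list(customer_view.query())
```

Because the view reads its sources each time it is queried, a row added to `billing_rows` later shows up in the next call to `query()` without any copy or reload step.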

Rather than move or replicate data, Denodo’s data virtualization platform works by collecting and analyzing metadata from a company’s various data platforms. “This helps us better understand the nature of the data source,” Randall explained. Denodo then uses that metadata to apply what she called “intelligent optimization techniques” that take into account the nature of the data and where it’s running.

“We’ll know if it’s a relational database, a database running in the cloud or even if it’s a hybrid system. Knowing the true characteristics of a customer’s multiple data platforms lets us extract the necessary metadata. And that, in turn, lets us learn how all their data is working together so we can provide the most optimal path to data.”
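As a rough illustration of how source metadata can steer a query plan, the Python sketch below picks which source to read first when joining two systems. The article does not describe Denodo’s optimizer in this detail, so the `SourceMetadata` fields, the `plan_join` function and the strategy names are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SourceMetadata:
    """Hypothetical facts a virtualization layer might hold about a source."""
    name: str
    kind: str               # e.g. "relational", "cloud", "hybrid"
    estimated_rows: int
    supports_filters: bool  # can the source apply a filter itself?


def plan_join(a: SourceMetadata, b: SourceMetadata) -> Tuple[str, str, str]:
    """Return (source to read first, source to probe, strategy)."""
    # Read the smaller source first so fewer keys need to be matched.
    small, large = sorted((a, b), key=lambda s: s.estimated_rows)
    if large.supports_filters:
        strategy = "push keys as filter"
    else:
        strategy = "fetch and join locally"
    return small.name, large.name, strategy


crm = SourceMetadata("crm", "cloud", 5_000, supports_filters=True)
warehouse = SourceMetadata("warehouse", "relational", 80_000_000,
                           supports_filters=True)
plan = plan_join(warehouse, crm)
```

Here the plan reads the small CRM source first and asks the warehouse to filter on the matching keys, which is one plausible reading of “the most optimal path to data.”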

“We do optimizations to the data source with in-flight techniques. There is no specific [physical] repository,” she added. Rather, Denodo uses its in-memory fabric, caching, MPP and other capabilities to deliver consolidated views across diverse data – and at high performance.

Denodo’s in-memory fabric has two key benefits for users, Randall shared:

The first one is caching. She shared this example: “Let’s say you're extracting leads from a Salesforce application. And Salesforce API provides the ‘delta’ leads, so you don't necessarily have to do a full [data] extract every time. You can bring just the ‘delta’ leads into memory for analysis perspective. Denodo will just get the data from the cache instead of having to go to the source every time,” she said.
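The ‘delta’ pattern Randall describes can be sketched in a few lines of Python. This is a simplified model under stated assumptions: `fetch_leads_since` is a hypothetical stand-in for a source call that returns only records changed after a given timestamp, and it is not the actual Salesforce API or Denodo’s cache.

```python
from typing import Any, Callable, Dict, List

Lead = Dict[str, Any]


class DeltaCache:
    """Keeps leads in memory and pulls only records changed since the last refresh."""

    def __init__(self, fetch_since: Callable[[int], List[Lead]]) -> None:
        self._fetch_since = fetch_since
        self._leads: Dict[int, Lead] = {}
        self._high_water_mark = 0  # latest 'modified' value seen so far

    def refresh(self) -> int:
        """Fetch the delta, merge it into the cache, return how many rows moved."""
        delta = self._fetch_since(self._high_water_mark)
        for lead in delta:
            self._leads[lead["id"]] = lead
            self._high_water_mark = max(self._high_water_mark, lead["modified"])
        return len(delta)

    def leads(self) -> List[Lead]:
        # Served from memory; the source is not contacted here.
        return list(self._leads.values())


# Hypothetical source data and delta endpoint.
salesforce_leads = [{"id": 1, "name": "Ada", "modified": 100},
                    {"id": 2, "name": "Grace", "modified": 105}]


def fetch_leads_since(timestamp: int) -> List[Lead]:
    return [lead for lead in salesforce_leads if lead["modified"] > timestamp]


cache = DeltaCache(fetch_leads_since)
first = cache.refresh()    # full load on the first call
second = cache.refresh()   # nothing has changed, so nothing is transferred
salesforce_leads.append({"id": 3, "name": "Edsger", "modified": 110})
third = cache.refresh()    # only the new lead is transferred
```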

The second is for query performance. “This is especially useful where there is an extremely large volume of data, like in the terabytes. We can provide just the pieces of data you need for analysis without having to run through the entire dataset,” she said.
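One common way to return “just the pieces of data you need” is to push the filter down to the source instead of pulling everything and filtering afterwards. The article does not say which techniques Denodo uses here, so the Python sketch below simply contrasts the two approaches, with an in-memory SQLite database standing in for a large source; the table and function names are hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for a large remote source.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "EMEA", 120.0), (2, "APAC", 80.0), (3, "EMEA", 45.5)],
)


def fetch_all_then_filter(region):
    """Pull every row across, then filter locally. Returns (rows, rows transferred)."""
    rows = source.execute(
        "SELECT id, region, amount FROM orders ORDER BY id").fetchall()
    return [row for row in rows if row[1] == region], len(rows)


def fetch_with_pushdown(region):
    """Let the source apply the filter so only matching rows are transferred."""
    rows = source.execute(
        "SELECT id, region, amount FROM orders WHERE region = ? ORDER BY id",
        (region,),
    ).fetchall()
    return rows, len(rows)


local_rows, local_transferred = fetch_all_then_filter("EMEA")
pushed_rows, pushed_transferred = fetch_with_pushdown("EMEA")
```

Both calls return the same two EMEA orders, but the pushdown version transfers two rows instead of three. On a table with terabytes of data, that gap is the difference that matters.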

A Look at Denodo’s Metadata Approach – and How it Works with Other Platform Features

With metadata playing such an important part in Denodo’s recipe, we asked Randall whether any extra data prep or coding would be required by users.

“Great question! Out-of-the-box, Denodo is capable of optimizing the metadata available to it. There is also an administrative interface if users want to configure something special,” Randall said.

Further, Denodo is working on added functionality it calls ‘self-learning optimization’ for metadata, Randall added. “We want to take the use of metadata to the next level, so this will automatically use learnings from the metadata to provide query optimization techniques on-the-fly.”

We asked her to guide us through some of Denodo 7’s other key technology updates:

- New and enhanced interfaces with elements for data stewards, data analysts, and business users or citizen analysts. In addition, there is a centralized UI for administrators to manage deployments and promotions, orchestrate tasks, monitor, audit, and troubleshoot.

- New “dynamic data catalog” functionality to let business users take special advantage of a new UI to discover, explore, prepare, and access data in real time via a user-friendly interface. This facilitates search and discovery of both data and metadata so users can more fully understand their data and start to personalize it to meet their needs. The feature also provides users the ability to enrich the metadata and the data, she added.

- Added best practices to govern “personalized assets” created with the catalogue. “They will be going through an approval process and a stewardship process before it gets consumed, so we enforce as well as self-service. This lets users find the data, understand and prepare the data and make it ready for analysis and reporting,” she said.

- Native support for MPP (massively parallel processing) engines including Spark, Hive, Impala and Presto boosts performance.

- Out-of-the-box integration with external data governance and catalog tools through the Denodo Governance Bridge (DGB). This supports bidirectional metadata sharing for end-to-end data stewardship and governance.

- New tools and APIs to simplify multi-cluster deployments and promotions, license updates, and more. New capabilities help organizations realize the benefits of agile methodologies and continuous delivery philosophies.

- Denodo 7 runs atop a multi-location architecture that can support multi-cloud, hybrid and edge scenarios. It is specifically designed to provide location transparency to minimize expensive data movement between different locations, she added.

Exploding Data Sources Require New Thinking; Consolidated Data Views

Denodo 7’s capabilities and architecture reflect the need for new ways to think about getting data to where it needs to be, when it needs to be available, without undue complexity or delay in delivery, Randall said. “For years, enterprises used a single monolithic data warehouse, which worked extremely well and still does for many cases,” she said. But additional ways of working with data are required as the need for real-time insights into business information increases, she noted.

“Business users really don't want to wait. So, we’re seeing customers are looking for more flexible and [rapid] ways to bring in new data with their enterprise data warehouse. The [data] warehouse still plays a role, but with so many new data sources, it is no longer at the forefront,” Randall said. It’s more that companies are looking to augment their data warehouse with these new sources, “so they can align their diverse data into a single picture, even in real-time,” she added.

Denodo 7’s approach to data virtualization is not simply about simplifying integration or breaking down data silos. It is about delivering business information by bringing raw data and analytics together to provide a more meaningful, global view of a company’s data, Randall said.

For business analysts and consumers of such data, Denodo provisions data to major BI dashboards, including Tableau and MicroStrategy. Thanks to its ongoing API support, Denodo 7 lets users create and publish data assets as a data service.

“We’ve had this functionality all along to let users leverage data services from homegrown or BI tools. Users can just go in and click and publish a data service and downstream users or apps can use that,” Randall said. Beyond these integration interfaces, however, Denodo 7 adds what Randall called a ‘universal semantic layer’ that renders uniform and consistent data across multiple business intelligence tools. “This means users don't have to spend time calculating data shown in different reports across different tools. Users will get consistent and common views of data.”

Dave Wells, an analyst with Eckerson Group, noted the benefits of Denodo’s approach. “Data virtualization provides real-time access to data. Business users do not have time to chase down data from various sources.”

Beyond the core platform, Wells specifically commended Denodo 7’s latest UI updates: “With data services presented through a ‘storefront’ interface where data users can shop to find best-fit data to meet their needs, the pain of data seeking and data evaluation is quickly relieved.”


All rights reserved © 2025 Enterprise Integration News, Inc.